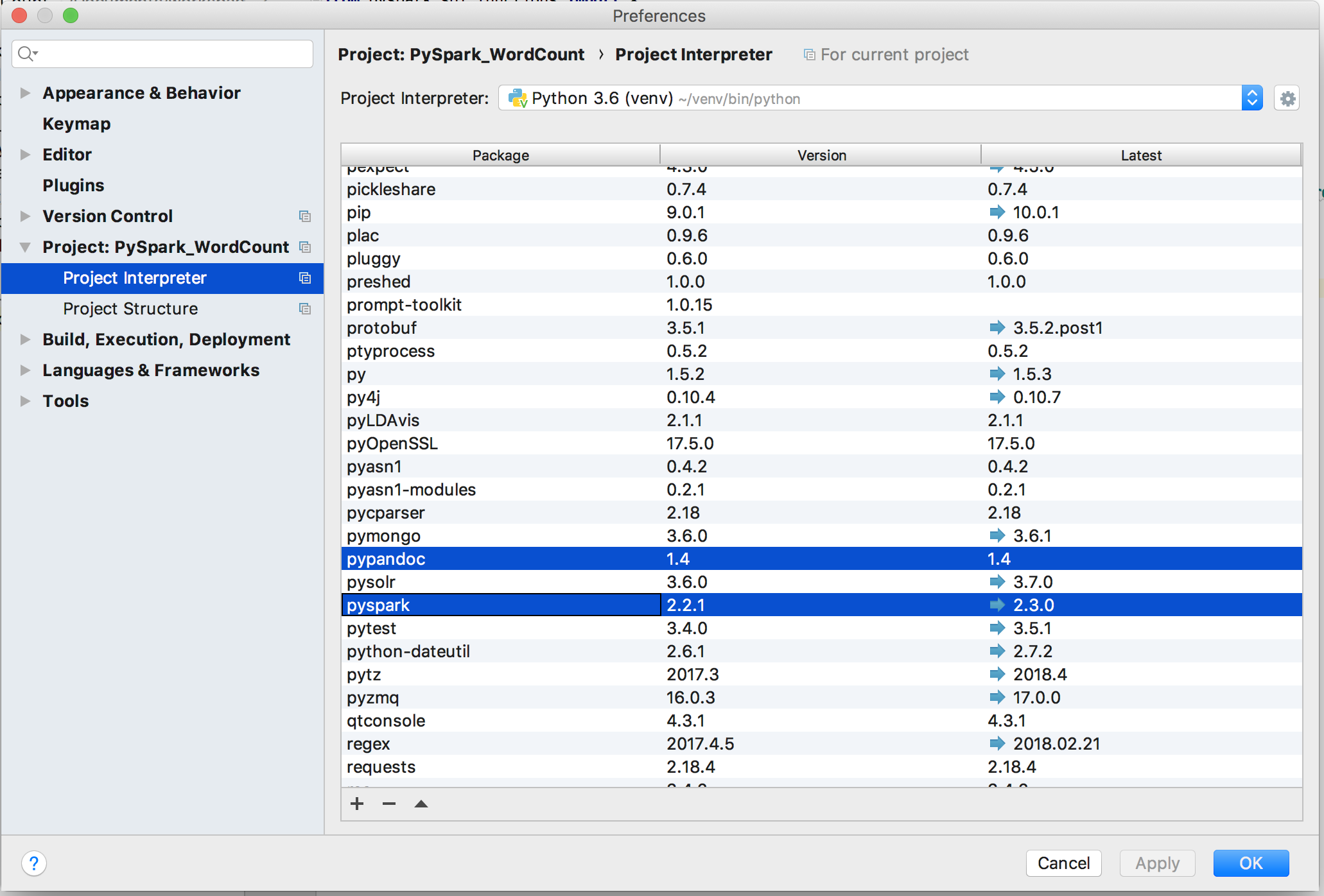

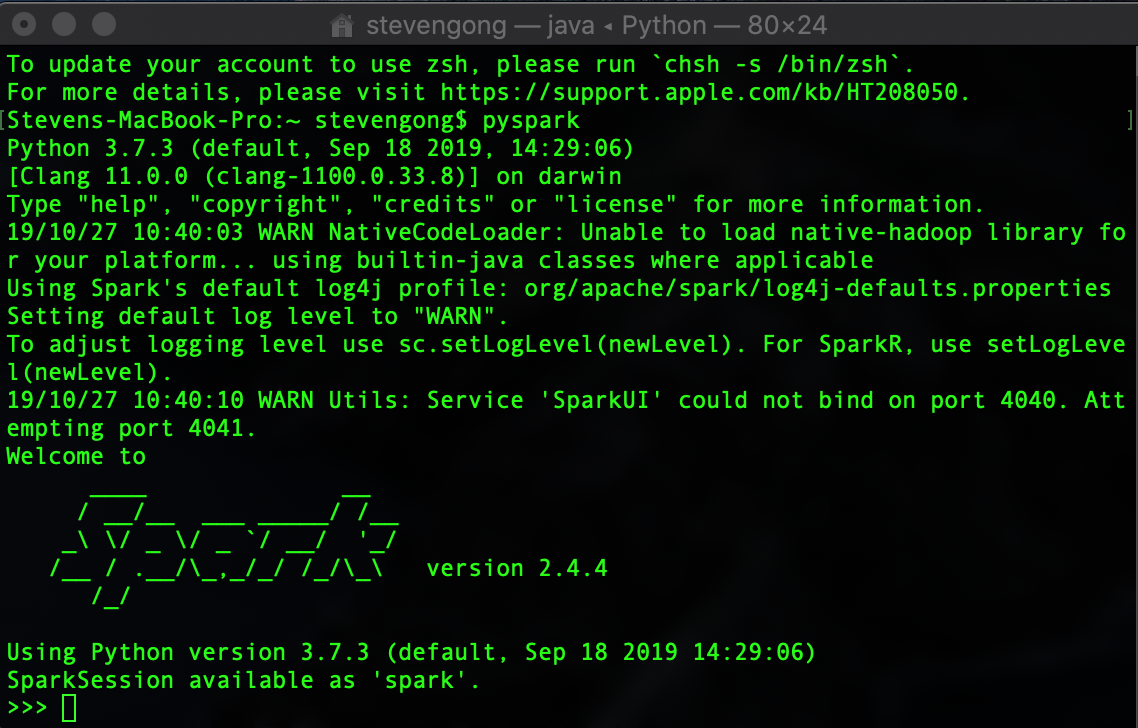

# PySpark Installation Guide with Jupyter Notebook

A lot of people prefer to work with Python over any JVM-based language, especially data analysts and data scientists, simply because Python is robust, flexible, easy to pick up, and has a huge number of libraries available for almost everything you want to do with it. For this reason, Spark provides a Python interface called PySpark.

In our previous blog post, we covered how to set up Apache Spark locally on a Linux-based OS. In this post, we will extend that setup to include PySpark and also see how we can use Jupyter Notebook with Apache Spark.

When I write PySpark code, I use Jupyter Notebook to test my code before submitting a job on the cluster. In this post, I will show you how to install and run PySpark locally in Jupyter Notebook on Windows. I've tested this guide on a dozen Windows 7 and 10 PCs in different languages.

## A. Items needed

1. The Spark distribution (a .tgz file).
2. Python and Jupyter Notebook. You can get both by installing the Python 3.x version of the Anaconda distribution.
3. winutils.exe, a Hadoop binary for Windows, from Steve Loughran's GitHub repo. Go to the folder for the Hadoop version that matches your Spark distribution and find winutils.exe under /bin.
4. The findspark Python module, which can be installed by running python -m pip install findspark in either the Windows command prompt or Git Bash, provided Python is installed in item 2. You can find the command prompt by searching for cmd in the search box.
5. Java. If you don't have Java, or your Java version is 7.x or lower, download and install Java from Oracle. I recommend getting the latest JDK (9.0.1 at the time of writing); if a newer JDK causes Java errors, a JDK 8 build such as the one used in step B5 is a safer choice for Spark 2.2.x.
6. 7-zip. To unpack a .tgz file on Windows, you can download and install 7-zip, then unpack the .tgz file from the Spark distribution in item 1 by right-clicking the file icon and selecting 7-zip > Extract Here.

## B. Installing PySpark

After getting all the items in section A, let's set up PySpark.

1. Unpack the .tgz file. For example, I unpacked mine with 7-zip from step A6 and put it under D:\spark\spark-2.2.1-bin-hadoop2.7
2. Move the winutils.exe downloaded in step A3 to the \bin folder of the Spark distribution. For example, D:\spark\spark-2.2.1-bin-hadoop2.7\bin\winutils.exe
3. Add environment variables. The environment variables let Windows find where the files are when we start the PySpark kernel. You can find the environment variable settings by typing "environ…" in the search box. The variables to add are, in my example: Name …
4. In the same environment variable settings window, look for the Path or PATH variable, click Edit, and add D:\spark\spark-2.2.1-bin-hadoop2.7\bin to it. In Windows 7, you need to separate the values in Path with a semicolon.
5. (Optional, if you see a Java-related error in step C.) Find the installed Java JDK folder from step A5, for example D:\Program Files\Java\jdk1.8.0_121, and add the following environment variable: Name … In my experience, this error only occurs in Windows 7, and I think it's because Spark couldn't parse the space in the folder name. Edit (1/23/19): You might also find Gerard's comment helpful: if the JDK is installed under \Program Files (x86), replace the Progra~1 part with Progra~2.

## C. Running PySpark in Jupyter Notebook

To run Jupyter Notebook, open the Windows command prompt or Git Bash and run jupyter notebook. If you use Anaconda Navigator to open Jupyter Notebook instead, you might see a "Java gateway process exited before sending the driver its port number" error from PySpark. Fall back to the Windows command prompt if that happens.
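Before launching the notebook, it can help to check the pieces this guide depends on: that winutils.exe sits in the Spark \bin folder, that the \bin folder is on Path (semicolon-separated), and what a space-free JDK path would look like. The following is a minimal sketch, not part of the original guide. The helper names `check_setup` and `to_short_program_files` are hypothetical, and the sketch assumes the default 8.3 short names Progra~1 and Progra~2 exist on your machine.

```python
import os
from pathlib import PureWindowsPath

# Assumption: Windows created the default 8.3 short names for these folders.
SHORT_NAMES = {
    "program files": "Progra~1",
    "program files (x86)": "Progra~2",
}


def to_short_program_files(path):
    """Swap a 'Program Files' folder for its short name so the path has no spaces."""
    parts = PureWindowsPath(path).parts
    fixed = [SHORT_NAMES.get(part.lower(), part) for part in parts]
    return str(PureWindowsPath(*fixed))


def check_setup(spark_dir, path_value, file_exists=os.path.isfile):
    """Return a list of problems found with the Spark folder and the Path value."""
    bin_dir = PureWindowsPath(spark_dir) / "bin"
    problems = []

    # winutils.exe must have been moved into the \bin folder of the distribution.
    if not file_exists(str(bin_dir / "winutils.exe")):
        problems.append("winutils.exe is missing from " + str(bin_dir))

    # Path entries are separated by semicolons on Windows.
    entries = [PureWindowsPath(e.strip()) for e in path_value.split(";") if e.strip()]
    if bin_dir not in entries:
        problems.append(str(bin_dir) + " is not on Path")

    return problems


if __name__ == "__main__":
    # Example folder taken from the guide; adjust to your own location.
    spark_dir = r"D:\spark\spark-2.2.1-bin-hadoop2.7"
    for problem in check_setup(spark_dir, os.environ.get("PATH", "")):
        print("Problem:", problem)
    # Prints D:\Progra~1\Java\jdk1.8.0_121
    print(to_short_program_files(r"D:\Program Files\Java\jdk1.8.0_121"))
```

An empty list from `check_setup` means both checks passed. The `file_exists` parameter is there only so the check can be exercised without a real Spark folder.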
